Discover how LiDAR works, its laser measurement principle, and its decisive role in the navigation and safety of autonomous drones.

In summary

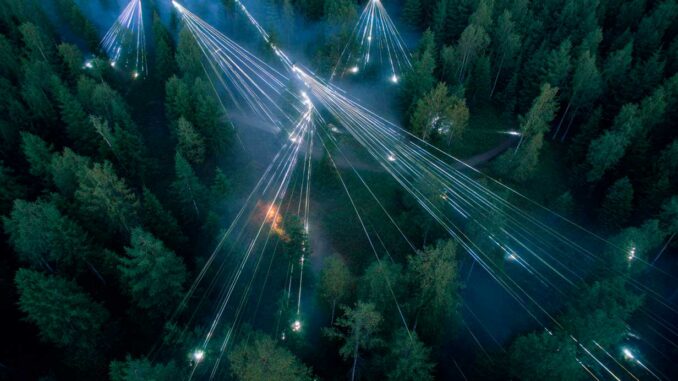

LiDAR (Light Detection and Ranging) is a laser remote sensing technology that measures distances by calculating the time of flight (ToF) of light pulses. Mounted on autonomous drones, it combines a laser sensor, a GNSS receiver, and an IMU to produce 3D point clouds with centimeter-level accuracy. Unlike photogrammetry, it can penetrate gaps in vegetation and capture the underlying ground relief, making it crucial for mapping, surveying, forestry, and disaster management. In aviation and on autonomous drones, it is also used for obstacle detection and navigation in complex environments. Recent advances, including solid-state (semiconductor) LiDAR and lightweight systems for micro-drones, are further expanding its applications, from autonomous piloting to infrastructure inspection, and improving the reliability of drones in critical missions.

The technical principle of LiDAR and time of flight

LiDAR is based on the time of flight (ToF) of a light signal. The sensor emits millions of very short infrared laser pulses, each typically lasting a few nanoseconds, toward the ground or obstacles. Light travels at approximately 299,792 km/s (in a vacuum; very slightly slower in air), and the system records the time elapsed between the emission of each pulse and the return of its reflection.

The distance is calculated using the following formula:

d = (c × Δt) / 2, where c is the speed of light and Δt is the round-trip travel time.
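The formula translates directly into code. The sketch below is illustrative only: it uses the vacuum speed of light and ignores the roughly 0.03 % slowdown in air, and `tof_distance` is a hypothetical helper name, not part of any sensor API.

```python
# Speed of light in vacuum (m/s); the ~0.03 % slowdown in air is ignored here.
C = 299_792_458.0

def tof_distance(round_trip_s: float) -> float:
    """Return the one-way distance in metres for a round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # Divide by 2 because the pulse travels to the target and back.
    return C * round_trip_s / 2.0

# A return received 800 ns after emission corresponds to a target about 120 m away.
print(f"{tof_distance(800e-9):.2f} m")  # 119.92 m
```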

By repeating this operation several hundred thousand times per second, LiDAR builds a very dense cloud of 3D coordinates. Some modern sensors exceed one million measurements per second, with accuracies of 2 to 3 cm in the field.
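The same formula shows why centimeter accuracy is demanding. Because the distance is c × Δt / 2, every nanosecond of timing error shifts the range by about 15 cm. The sketch below, with hypothetical helper names, considers timing alone; in the field, GNSS and IMU errors also contribute to the overall accuracy.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def range_error(timing_error_s: float) -> float:
    """One-way range error (m) caused by an error in the measured round-trip time."""
    return C * timing_error_s / 2.0

def timing_needed(range_accuracy_m: float) -> float:
    """Round-trip timing resolution (s) needed for a given one-way range accuracy."""
    return 2.0 * range_accuracy_m / C

print(f"1 ns timing error -> {range_error(1e-9) * 100:.1f} cm")
print(f"2 cm accuracy -> {timing_needed(0.02) * 1e12:.0f} ps")
```

In other words, a 2 cm target implies resolving the return time to roughly 130 picoseconds.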

This principle is particularly well suited to drones: as an active sensor that provides its own illumination, LiDAR does not depend on ambient light and also works at night. In addition, the low divergence of the laser beam keeps its footprint small, allowing fine details such as electrical cables or terrain irregularities to be measured.
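The effect of low divergence can be estimated with the small-angle approximation: the spot diameter grows roughly as range × divergence. The 0.5 mrad value below is a hypothetical example, not the specification of any particular sensor, and whether a thin cable is actually detected also depends on the strength of its return.

```python
def footprint_diameter(range_m: float, divergence_rad: float, exit_aperture_m: float = 0.0) -> float:
    """Approximate laser footprint diameter (m) at a given range.

    divergence_rad is the full-angle beam divergence (small-angle approximation);
    exit_aperture_m is the beam diameter at the sensor window, often negligible.
    """
    return exit_aperture_m + range_m * divergence_rad

# Hypothetical 0.5 mrad beam at 100 m: a spot about 5 cm across.
print(f"{footprint_diameter(100.0, 0.5e-3) * 100:.1f} cm")
```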

Integration with positioning and orientation systems

For a distance measurement to be usable, the position and orientation of the sensor at the moment of each pulse must be known with precision. On an autonomous drone, LiDAR is therefore associated with two key elements:

- A GNSS receiver (typically tracking GPS and Galileo, among other constellations) that provides latitude, longitude, and altitude, with an accuracy of a few centimeters in RTK (Real-Time Kinematic) mode.

- An Inertial Measurement Unit (IMU) that measures accelerations and angular rates, from which the roll, pitch, and yaw of the platform are determined in real time.

By combining this data, each laser measurement is placed in a real geographic coordinate system, producing a cloud of georeferenced points.
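A minimal sketch of this georeferencing step follows, under simplifying assumptions: the sensor frame coincides with the drone's body frame (no lever arm or boresight correction), body axes are x forward, y left, z up, attitude uses Z-Y-X (yaw, pitch, roll) Euler angles, and the GNSS position has already been converted to a local East-North-Up frame in meters. Production pipelines handle each of these explicitly.

```python
import math

def rotation_zyx(roll: float, pitch: float, yaw: float) -> list[list[float]]:
    """Body-to-local rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def georeference(point_sensor, roll, pitch, yaw, position):
    """Rotate a sensor-frame point into the local frame, then add the drone's position.

    Assumes the sensor frame equals the body frame (no lever arm or boresight offset).
    """
    r = rotation_zyx(roll, pitch, yaw)
    rotated = [sum(r[i][j] * point_sensor[j] for j in range(3)) for i in range(3)]
    return tuple(rotated[i] + position[i] for i in range(3))

# Level drone 120 m above the local origin; a return measured 50 m straight down.
p = georeference((0.0, 0.0, -50.0), 0.0, 0.0, 0.0, (100.0, 200.0, 120.0))
print(p)  # (100.0, 200.0, 70.0)
```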

These point clouds are then used to generate Digital Terrain Models (DTM), which represent the bare ground surface, and Digital Surface Models (DSM), which also include the objects present (vegetation, buildings, infrastructure).
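A crude way to see the difference between the two kinds of model is to grid the points and keep, per cell, the highest elevation (approximating a surface model) and the lowest (a rough ground estimate). This is only an illustration: real DTM workflows first classify ground points with dedicated filtering algorithms, and the sample points below are invented.

```python
import math
from collections import defaultdict

def rasterize(points, cell_size):
    """Grid (x, y, z) points and return {cell: (min_z, max_z)} for each occupied cell.

    max_z approximates a surface model (canopy, roofs); min_z is only a rough
    ground estimate, since real DTM workflows classify ground points first.
    """
    cells = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[key].append(z)
    return {key: (min(zs), max(zs)) for key, zs in cells.items()}

# Invented sample: a tree in the first 1 m cell, bare ground in the second.
points = [(0.2, 0.3, 101.5), (0.7, 0.4, 118.0), (1.4, 0.2, 101.8), (1.6, 0.9, 102.0)]
grid = rasterize(points, cell_size=1.0)
for cell, (zmin, zmax) in sorted(grid.items()):
    print(cell, "ground ~", zmin, "surface ~", zmax, "object height ~", round(zmax - zmin, 2))
```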

The role of LiDAR in mapping and topographic analysis

One of the strengths of airborne LiDAR is its ability to partially penetrate vegetation. When a pulse encounters a tree, part of the energy is reflected by the canopy (first return), while another part continues on its path and reaches the ground (last return).

By recording several successive returns for the same pulse, LiDAR can reconstruct both the profile of the vegetation and the topography of the underlying ground.
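The multiple-return idea can be sketched per pulse: the elevation of the first return minus that of the last gives a rough vegetation height at that spot. The `Pulse` structure here is hypothetical, and the code assumes the last return actually reached the ground, which is not guaranteed under dense canopy.

```python
from dataclasses import dataclass

@dataclass
class Pulse:
    """One emitted pulse and the elevations (m) of its returns, in the order received."""
    x: float
    y: float
    return_elevations: list[float]

def vegetation_height(pulse: Pulse) -> float:
    """First-minus-last return elevation; 0.0 when only one return was recorded.

    Assumes the last return hit the ground, which dense canopy can prevent.
    """
    if len(pulse.return_elevations) < 2:
        return 0.0
    return pulse.return_elevations[0] - pulse.return_elevations[-1]

pulses = [
    Pulse(10.0, 5.0, [124.3, 118.9, 102.1]),  # canopy, branch, ground
    Pulse(11.0, 5.0, [102.4]),                # open ground: single return
]
for p in pulses:
    print(p.x, p.y, round(vegetation_height(p), 1))
```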

This capability is crucial for:

- Forest management, by calculating the height, volume, and density of forests.

- Geology and flood prevention, by accurately modeling the terrain hidden by vegetation.

- Urban planning and civil engineering, by providing very high-resolution 3D maps for the design and monitoring of construction sites.

A LiDAR survey carried out at an altitude of 120 m by a drone equipped with a 32-channel sensor can cover several hectares in a matter of minutes, with a density of several hundred points per square meter.
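The order of magnitude of this claim can be checked with simple geometry: swath width = 2 × altitude × tan(FOV / 2), swept area per second = swath × ground speed, and mean density = points per second / swept area per second. The field of view, speed, and point rate below are hypothetical values chosen for illustration; a 32-channel spinning sensor sweeps 360°, so only the part of its output that lands in the swath should be counted, and strip overlap would reduce net coverage.

```python
import math

def survey_figures(altitude_m, fov_deg, speed_m_s, points_per_s, duration_s):
    """Return swath width (m), area covered (ha) and mean point density (pts/m^2).

    Assumes flat terrain, one straight pass, no strip overlap, and that
    points_per_s counts only returns landing inside the swath.
    """
    swath = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    area_rate = swath * speed_m_s                # m^2 swept per second
    area_ha = area_rate * duration_s / 10_000.0  # 1 ha = 10,000 m^2
    density = points_per_s / area_rate
    return swath, area_ha, density

# Hypothetical: 120 m altitude, 70° effective FOV, 10 m/s, 600,000 pts/s, 10 minutes.
swath, area_ha, density = survey_figures(120.0, 70.0, 10.0, 600_000, 600)
print(f"swath {swath:.0f} m, {area_ha:.0f} ha in 10 min, {density:.0f} pts/m^2")
```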

The contribution of LiDAR to the navigation and safety of autonomous drones

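Beyond mapping, LiDAR plays a central role in autonomous navigation and collision avoidance. Radar measures range well but with coarser angular resolution, and a single camera cannot measure distance directly; LiDAR, by contrast, provides direct, high-resolution three-dimensional measurements of the surroundings, refreshed many times per second.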

Drones use it to:

- Measure their height above the ground during automatic landing or terrain-following phases.

- Detect and avoid obstacles (buildings, trees, cables, poles) while flying in urban areas or forests.

- Maintain stable flight at low altitudes, even in low light or light fog conditions.
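Obstacle avoidance can be illustrated with a deliberately simple rule: find the nearest LiDAR point inside a corridor ahead of the drone and brake if it lies within the stopping distance plus a margin. Everything here is an assumption for illustration (body frame with x forward, y left, z up; constant deceleration; the default corridor size, deceleration, reaction time, and margin); real DAA logic is considerably more elaborate.

```python
import math

def stopping_distance(speed_m_s, decel_m_s2, reaction_s):
    """Distance covered during the reaction delay plus braking at constant deceleration."""
    return speed_m_s * reaction_s + speed_m_s ** 2 / (2.0 * decel_m_s2)

def nearest_in_corridor(points, half_width_m, half_height_m):
    """Closest forward distance among body-frame points (x fwd, y left, z up) in the corridor."""
    ahead = [x for x, y, z in points
             if x > 0 and abs(y) <= half_width_m and abs(z) <= half_height_m]
    return min(ahead, default=math.inf)

def must_brake(points, speed_m_s, decel_m_s2=4.0, reaction_s=0.2, margin_m=2.0,
               half_width_m=1.0, half_height_m=0.5):
    """True when the nearest obstacle ahead is inside stopping distance plus a margin.

    All default parameters are hypothetical illustration values.
    """
    limit = stopping_distance(speed_m_s, decel_m_s2, reaction_s) + margin_m
    return nearest_in_corridor(points, half_width_m, half_height_m) <= limit

# At 10 m/s the limit is 2.0 + 12.5 + 2.0 = 16.5 m: a cable 15 m ahead triggers braking,
# while a tree 5 m to the side is outside the corridor and ignored.
scan = [(15.0, 0.3, 0.1), (30.0, 5.0, 0.0)]
print(must_brake(scan, speed_m_s=10.0))
```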

Some solutions combine LiDAR with SLAM (Simultaneous Localization and Mapping) algorithms, allowing the drone to map its environment in real time while locating itself within it. This is essential for search and rescue missions in areas where GNSS signals are unavailable, or for inspecting complex infrastructure such as bridges and wind turbines.
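Many LiDAR SLAM pipelines estimate the drone's motion by aligning each new scan with the previous one or with the map. The sketch below shows only the closed-form 2D rigid-alignment step used inside ICP-style scan matching, under loud assumptions: points are 2D, noise-free, and their correspondences are already known. A full ICP would alternate this step with nearest-neighbor matching, and real SLAM adds loop closure and optimization.

```python
import math

def align_2d(src, dst):
    """Least-squares rotation and translation mapping src points onto dst.

    Assumes known one-to-one correspondences (src[i] <-> dst[i]).
    Returns (theta_rad, tx, ty) such that dst ~= R(theta) @ src + t.
    """
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    cross = dot = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy  # center both point sets
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)  # optimal rotation angle for centered points
    c, s = math.cos(theta), math.sin(theta)
    tx = dx - (c * sx - s * sy)     # translation maps the rotated src centroid onto dst's
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty

def transform(points, theta, tx, ty):
    """Apply a 2D rotation by theta followed by a translation (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

# Simulated motion between two scans: 5 degrees of yaw and a (0.4, -0.2) m shift.
scan_prev = [(2.0, 0.0), (2.0, 1.0), (2.0, 2.0), (0.0, 3.0), (1.0, 3.0)]
scan_curr = transform(scan_prev, math.radians(5.0), 0.4, -0.2)
theta, tx, ty = align_2d(scan_prev, scan_curr)
print(f"rotation {math.degrees(theta):.2f} deg, shift ({tx:.2f}, {ty:.2f}) m")
```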

The performance and technical limitations of LiDAR systems

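LiDAR sensors are distinguished by their wavelength, transmit power, pulse repetition frequency, and scanning field of view. Topographic airborne systems most often use near-infrared lasers (1,064 nm; 905 nm and 1,550 nm are also common on drone-mounted sensors), while green lasers (532 nm) are used for bathymetric measurements in aquatic environments because this wavelength penetrates water.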
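For bathymetric measurements, the same time-of-flight formula applies below the surface, except that light travels more slowly in water, by a factor equal to the refractive index n. The sketch below assumes n ≈ 1.33 and a near-vertical beam, ignoring refraction of off-nadir beams at the surface and the turbidity effects that real bathymetric processing must handle.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s
N_WATER = 1.33     # approximate refractive index of water at 532 nm (assumed)

def water_depth(surface_to_bottom_s: float, n_water: float = N_WATER) -> float:
    """Depth (m) from the delay between the water-surface and bottom returns.

    Near-vertical beam assumed; refraction at the surface is ignored.
    """
    return C * surface_to_bottom_s / (2.0 * n_water)

# A bottom return arriving 20 ns after the surface return: roughly 2.25 m of water.
print(f"{water_depth(20e-9):.2f} m")
```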

Typical performance includes:

- An effective range of 100 to 300 m for systems mounted on small drones.

- Vertical accuracy of 3 to 5 cm for low-altitude flights.

- Measurement rates of several hundred thousand to several million points per second.

However, the technology also has limitations:

- Sensitivity to weather conditions (dense fog, rain, snow) that scatter or absorb the beam.

- Energy consumption, an important factor for the drone's flight endurance.

- The high cost of high-performance sensors, although this is falling with mass production and the arrival of solid-state LiDAR.
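The energy cost can be estimated with a simple ratio: endurance = usable battery energy / total power draw. All figures below are hypothetical, and the sketch ignores the extra lift power needed to carry the sensor's mass, which is often a larger penalty than its electrical draw.

```python
def endurance_min(battery_wh, usable_fraction, flight_power_w, payload_power_w):
    """Flight time in minutes when the payload draws power from the flight battery.

    Ignores the additional lift power required to carry the payload's mass.
    """
    return battery_wh * usable_fraction / (flight_power_w + payload_power_w) * 60.0

# Hypothetical drone: 500 Wh battery, 80 % usable, 900 W to fly, 30 W LiDAR.
base = endurance_min(500.0, 0.8, 900.0, 0.0)
with_lidar = endurance_min(500.0, 0.8, 900.0, 30.0)
print(f"{base:.1f} min without LiDAR, {with_lidar:.1f} min with a 30 W sensor")
```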

Recent advances and developments in LiDAR for drones

The miniaturization of sensors and the rise of solid-state LiDAR are transforming how LiDAR is integrated into drones. Solid-state models have no moving parts, which makes them lighter and more robust and lowers their energy consumption. They make it possible to equip micro-drones for missions inside buildings or in confined environments.

Advances in processing algorithms, combined with the increasing power of embedded processors, now allow real-time fusion of LiDAR, camera, and GNSS/IMU data. This improves BVLOS (Beyond Visual Line of Sight) navigation and the accuracy with which flight paths are followed in urban areas.
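The principle of sensor fusion can be illustrated on a single quantity. The sketch below is a scalar Kalman filter for height above ground: it predicts the height from a vertical velocity (assumed to come from the IMU/GNSS solution), then corrects it with each LiDAR range to the ground. It assumes flat ground and hand-picked noise values and leaves out the camera entirely; real systems fuse the full 3D state, typically with extended Kalman filters or factor-graph optimization.

```python
class HeightFilter:
    """Scalar Kalman filter for height above ground (flat ground assumed)."""

    def __init__(self, h0: float, p0: float, q: float, r: float):
        self.h = h0  # height estimate (m)
        self.p = p0  # variance of the estimate (m^2)
        self.q = q   # process noise added at each prediction (m^2), assumed
        self.r = r   # LiDAR range measurement variance (m^2), assumed

    def predict(self, v: float, dt: float) -> None:
        """Propagate the height with a vertical velocity assumed to come from IMU/GNSS."""
        self.h += v * dt
        self.p += self.q

    def update(self, z: float) -> float:
        """Blend in a LiDAR height measurement z and return the new estimate."""
        k = self.p / (self.p + self.r)  # Kalman gain: weight given to the new measurement
        self.h += k * (z - self.h)
        self.p *= (1.0 - k)
        return self.h

f = HeightFilter(h0=0.0, p0=100.0, q=1e-4, r=0.09)
for i in range(200):
    f.predict(v=0.0, dt=0.05)
    f.update(10.3 if i % 2 == 0 else 9.7)  # noisy readings around a true height of 10 m
print(f"{f.h:.2f} m")
```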

LiDAR is increasingly being integrated into Detect and Avoid (DAA) systems for unmanned aviation, paving the way for autonomous air taxis and urban air logistics.

The strategic impact of LiDAR in aviation and aerial robotics

Aerial LiDAR is not just a mapping tool. It is establishing itself as an essential building block of advanced aerial robotics. Its role is twofold: to provide reference topographic data for mission planning and to ensure the dynamic safety of drones in flight.

In the agricultural sector, it supports precision farming by helping to optimize irrigation and assess crop conditions. In disaster management, it is used to quickly map affected areas and guide rescue teams. In the military domain, it contributes to tactical reconnaissance and the guidance of autonomous systems.

The convergence of LiDAR, artificial intelligence, and 5G connectivity will further increase its influence, enabling drones to share and analyze vast amounts of collected geospatial data.

A technology set to redefine the standards of autonomous aviation

LiDAR has evolved from a scientific measurement tool to a strategic component for unmanned aviation. By improving the accuracy and responsiveness of on-board perception, it brings autonomous drones closer to the requirements of large-scale commercial operations.

Challenges remain, particularly in reducing weight, cost, and sensitivity to atmospheric conditions, but industry trends suggest that future autonomous aircraft, whether logistics drones, air taxis, or hybrid surveillance vehicles, will rely on an integrated combination of LiDAR and other sensors.

This evolution is transforming the way drones interact with airspace, enabling safe and smooth navigation in increasingly complex environments.

War Wings Daily is an independent magazine.