Armed and autonomous machines are entering the battlefield. This article reviews their real capabilities, algorithmic decisions, legal frameworks, and responsibilities.

The fundamental concept of lethal autonomous weapons

Lethal autonomous weapons (LAWs), often referred to as killer robots, are systems capable of selecting a target and applying force without immediate human intervention. Once activated, engagement can be triggered based on a target profile, sensors, and algorithms. Three categories structure the analysis: “human in the loop” (authorization required to fire), “human on the loop” (supervision with the possibility of cancellation), and “human out of the loop” (no intervention during engagement). This typology raises the central question: what level of human control must be guaranteed to ensure that autonomous weapons in modern warfare remain lawful and ethical?

The line between automatic, autonomous, and intelligent

A naval close-in defense system that tracks an incoming missile and fires in automatic mode is not necessarily “intelligent” in the strong sense. It applies deterministic rules (speed, heading, distance) with a reaction time of a few seconds. Autonomy adds the ability to perceive, classify, and decide in an uncertain environment, sometimes through machine learning. This shift affects the critical functions (detection, identification, target selection, firing). Debates on killer robots and military ethics focus precisely on these functions.

Families of systems already deployed

Naval point defense systems

The Phalanx CIWS automates search, tracking, threat assessment, and firing. In automatic mode, the system can engage without additional orders, with a typical engagement range of approximately 1.85 km (1 NM) and a rate of fire of approximately 4,500 rounds/minute. This type of defense is an example of supervised autonomy designed for the final seconds of an interception, when human response time is insufficient. From an international security standpoint, this is a case where the machine must decide quickly but remains integrated into a set of rules of engagement and supervision.

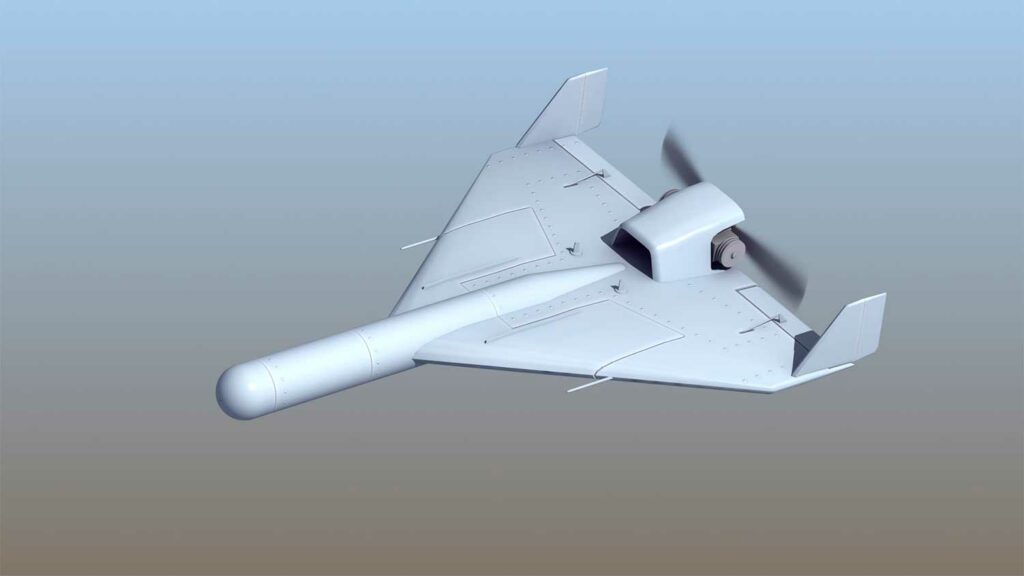

“Smart” loitering munitions

Loitering munitions combine surveillance and strike capabilities. The IAI Harpy autonomously searches for radar emitters in a defined area; it can loiter for several hours and dive on the detected signal. The IAI Harop, a more recent variant, is most often operator-controlled via an optronic payload, with several hours of endurance and an extended range depending on the version. These systems illustrate both ends of the spectrum: target classification delegated to the machine (Harpy against radars), and an operator in the loop when visual confirmation is human (Harop).

The tactical missile drone

The Switchblade 300/600 from AeroVironment remains the archetype of the “human in the loop” concept. The operator sees the target and can authorize or abort the attack until the last second. The weapon is portable and designed for close support with an appropriate payload. This is a useful counterexample: lethal autonomous weapons and artificial intelligence do not always mean firing without humans; many current applications keep the human decision at the point of fire.

The “loyal wingman” attack drone

Platforms such as the MQ-28 Ghost Bat (Boeing) and XQ-58 Valkyrie (Kratos) are exploring human-machine cooperation: fighter escort, advanced reconnaissance, jamming, and manned-unmanned teaming. The goal is deterrence through mass and flexibility, without necessarily removing humans from lethal decision-making. The arms race in autonomous weapons is tangible: tests, flights, and demonstrations are multiplying.

Uses, companies and intended applications

Israel Aerospace Industries (IAI) and Elbit Systems dominate loitering munitions and sensors. STM (Turkey) has developed the Kargu-2, equipped with ATR (automatic target recognition) and mentioned in UN reports on Libya in 2020-2021. In the US, AeroVironment produces the Switchblade; Shield AI is developing Hivemind, an autonomous pilot for flight and swarms in environments without GPS or communications; Anduril provides multi-domain systems powered by Lattice for counter-UAS and site security; Palantir supplies Maven data aggregation and decision-support suites. These players are targeting ISR, artillery support, SEAD/DEAD against radars, counter-drone missions, and cooperation with manned aircraft. Strategically, these systems follow a logic of saturation and reduced unit cost.

Algorithmic decision-making: from sensor to shot

The decision chain of a LAW includes sensor fusion, detection, classification, threat assessment, selection, and engagement. Algorithms (vision, radar, radio frequency) establish a confidence score and compare it to a threshold set by doctrine. Best practices include:

- Geolocation and dynamic prohibitions (no-strike lists).

- Engagement windows limited in space (square kilometers) and time (minutes).

- Human supervision with stop button and abort at any time.

- Verification & Validation (V&V) and operational tests against decoys, jamming, weather.

These safeguards aim to reduce automation bias, classification errors, and collateral damage; they are complemented by Article 36 legal reviews prior to deployment. Respect for human rights also requires planning for the traceability of decisions: logs, mission configuration, model versions.

Operational and strategic consequences

Autonomous weapons promise sensor-to-shooter cycle times that are several orders of magnitude shorter, increased surveillance endurance (flight hours), and dispersion of human risk. Loitering munitions with 9 hours of endurance can saturate an area, while a naval CIWS can deal with a threat in seconds. This acceleration in tempo can overwhelm command loops and lead to escalation incidents, both at sea and on land. The other major effect is economic: the proliferation of inexpensive platforms encourages saturation and swarms that require permanent active defenses.

For conventional forces, autonomy is transforming practices: permanent anti-drone patrols, increased use of decoys, greater mobility and reduced signature of headquarters. For small armies, the combination of “drones + AI + loitering munitions” offers asymmetric leverage. Autonomy and deterrence then come into tension: numerical mass can dilute the traditional deterrent effect of rare platforms.

The reality on the ground: what really exists

Several autonomous systems have existed for years for defensive missions: CIWS and land-based C-RAM systems automatically engage rockets, drones, or missiles when they enter an engagement envelope. In the SEAD domain, Harpy is designed to detect and strike radars without real-time human confirmation, while Harop relies on the operator to validate the target. The makers of models such as KUB-BLA and Lancet claim automatic recognition and autonomous flight capabilities on programmed routes. Nevertheless, most forces retain a human in the loop for firing decisions, precisely to remain within the framework of international conventions and rules of engagement.

Applicable law and responsibility

The existing framework

International humanitarian law (IHL) applies in full to LAWs: distinction, proportionality, and precautions. Many states conduct legal reviews under Article 36 of Additional Protocol I (1977): an ex ante assessment of the lawfulness of weapons and their conditions of use (environments, targets, settings). On state responsibility, IHL ties the use of autonomous weapons to chains of command; individual criminal responsibility may arise from reckless deployment decisions or use contrary to the rules. The debate is also giving rise to proposals for new instruments to clarify “meaningful human control.”

National policies and state positions

The US DoD requires that systems allow commanders and operators to exercise appropriate levels of human judgment over the use of force, with rigorous V&V and authorizations at the highest level for the most sensitive developments. The United Kingdom insists on governance that ensures compliance with IHL for all weapons with autonomous functions. France considers fully autonomous lethal systems to be contrary to the principle of human control and promotes sufficient control and accountability throughout the life cycle. International law on lethal autonomous weapons is therefore being supplemented by soft law pending a global standard.

UN dynamics

In 2024, the United Nations General Assembly adopted a resolution on LAWs with 166 votes in favor, 3 against, and 15 abstentions, opening a new forum for discussion in New York. The Secretary-General calls for a legally binding instrument by 2026, combining prohibitions (systems without human control or targeting individuals as such) and regulations (usage parameters, supervision, traceability). This path complements the work of the Convention on Certain Conventional Weapons (CCW) and its Group of Governmental Experts. Global governance is moving towards a two-tier model: prohibiting certain systems and regulating others.

Technical responsibility: how to make it credible

For European defense, as for others, verifiable accountability requires:

- Versioned technical files (training data, parameters, tests).

- Traceability of decisions (event logs, mission settings, geotagging).

- Contextual limitation (exclusion of populated areas, restricted target profiles).

- Independent assessments and joint exercises under jammed conditions.
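The traceability item in the list above can be made concrete with a tamper-evident event log. The following is only a minimal sketch, assuming a simple SHA-256 hash chain in which each record commits to the one before it; the `AuditLog` class and the event fields (`model_version`, `mission_config`, `operator`, `action`) are hypothetical names chosen for illustration, not a description of any fielded system.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class AuditLog:
    """Append-only log; each entry stores the hash of the previous entry."""
    entries: list = field(default_factory=list)

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"index": len(self.entries), "prev_hash": prev_hash, "event": event}
        body["hash"] = _digest(body)  # hash computed before the "hash" key is added
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        # Recompute every hash and check that the chain links are intact.
        prev_hash = GENESIS
        for i, entry in enumerate(self.entries):
            unsigned = {k: v for k, v in entry.items() if k != "hash"}
            if (entry["index"] != i
                    or entry["prev_hash"] != prev_hash
                    or entry["hash"] != _digest(unsigned)):
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical usage: record which model version, mission settings, and
# operator were involved in each supervised decision.
log = AuditLog()
log.append({"model_version": "v1.2.0", "mission_config": "cfg-001",
            "operator": "op-17", "action": "abort"})
log.append({"model_version": "v1.2.0", "mission_config": "cfg-001",
            "operator": "op-17", "action": "supervision_check"})
print(log.verify())
```

Editing any stored record after the fact changes its hash and breaks the chain, which an independent auditor can detect by calling `verify()`. A real system would also need signed entries and secure storage, which this sketch leaves out.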

Several organizations (ICRC, NGOs) recommend banning systems without meaningful human control and those targeting individuals, and strictly regulating all others (configuration, supervision, operating environment).

The real influence today: caution and asymmetries

In the field, the real influence of LAWs lies less in “fully autonomous” firing than in the speed and persistence offered by partial autonomy. A swarm of small ISR drones combined with loitering munitions is changing the geometry of combat: continuous observation, multi-sensor detection, and rapid counterattack. In the Red Sea, the rise of automatic interceptions against missiles and drones illustrates how defensive autonomy has become essential. Conversely, offensive autonomy against people remains politically and legally controversial, and operationally risky in densely populated environments.

A possible trajectory for the next five years

Lethal autonomous weapons and the robotization of armies will likely progress along three lines: active defense (anti-missile/anti-drone), smarter loitering munitions that are still supervised, and human-machine teaming at the operational level. The normative debate, led by the United Nations, should result in a dual framework of targeted prohibitions and detailed regulations (human control, recording, audits). The key will be proof of control: armies capable of certifying the who, when, and how of a lethal decision will gain legitimacy as well as effectiveness.

War Wings Daily is an independent magazine.